ChatGPT API - How to Get Started?

We all love using ChatGPT. But have you ever wondered how cool it would be to build something of your own using the same technology? Well, that’s exactly what we’re going to do!

ChatGPT is powered by models such as GPT-4, GPT-4o Mini, and GPT-3.5, and the ChatGPT API lets us integrate these models into our own projects. We can create a content-writing assistant, an automation tool, or something entirely unique. The possibilities are endless.

In this blog, I’ll walk you through the process of connecting to the ChatGPT API and help you understand its nuances.

Using ChatGPT API – Prerequisites

✅ Python: It’s recommended to use Python 3.8 or later for compatibility and stability.

✅ Python IDE: You can use Jupyter Notebooks, VS Code, PyCharm, or any other IDE of your choice.

PS: You don’t need to be a data science expert to follow along! Enthusiasm and a willingness to experiment are all you need. 😄

So, let’s dive in and start building! 🚀

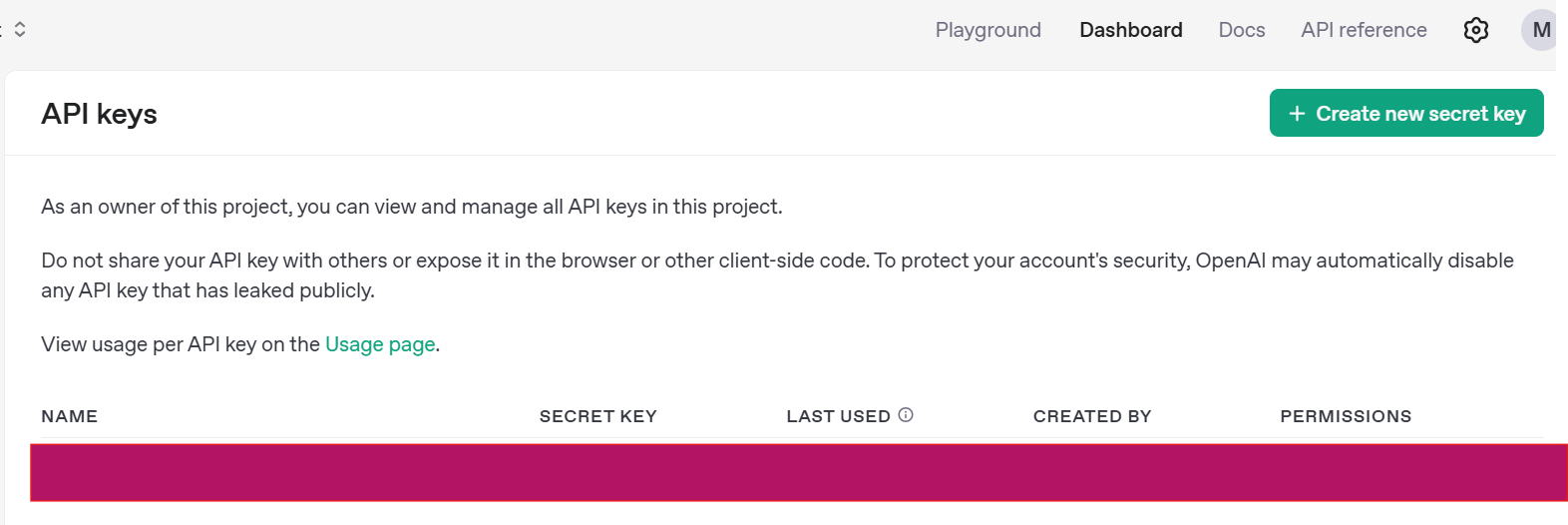

Step 1: Get API Access

To use the ChatGPT API, you need an OpenAI API key. To get one:

- Sign up on OpenAI – If you don’t already have an account, create one at OpenAI's website.

- Get an API Key – Navigate to the API section and generate a new API key.

- Store it Securely – Keep the key somewhere safe and do NOT share it publicly.

Once your API key is created, you can manage it in the API Keys section of your account and monitor its usage there.
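One common way to keep the key out of your code is to read it from an environment variable. Here is a minimal sketch of that idea. The helper name load_api_key is my own (hypothetical), and I am assuming the key lives in a variable called OPENAI_API_KEY.

```python
import os


def load_api_key(env=None, var_name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise if it is missing.

    `load_api_key` is a hypothetical helper for this post, not part of any library.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running.")
    return key


# Demo with a stand-in mapping so that no real key appears in the code.
demo_env = {"OPENAI_API_KEY": "sk-example-not-a-real-key"}
print(load_api_key(demo_env)[:3] + "...")  # show only a short prefix, never the full key
```

In a real project you would call load_api_key() with no arguments and pass the result to the client.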

Step 2: Install Required Libraries

To interact with the API, install the openai package if it isn't already installed.

pip install openai

Step 3: Create your First Request

from openai import OpenAI

# Replace the placeholder with your own key (never commit a real key to source control)
client = OpenAI(
    api_key="Write your key here"
)

completion = client.chat.completions.create(
    model="gpt-4o-mini",
    store=True,
    messages=[
        {"role": "user", "content": "What is the most popular non-fiction book?"}
    ],
    max_tokens=50,
)

print(completion.choices[0].message)

Understanding the Code & Response

1️⃣ Why Use GPT-4o Mini?

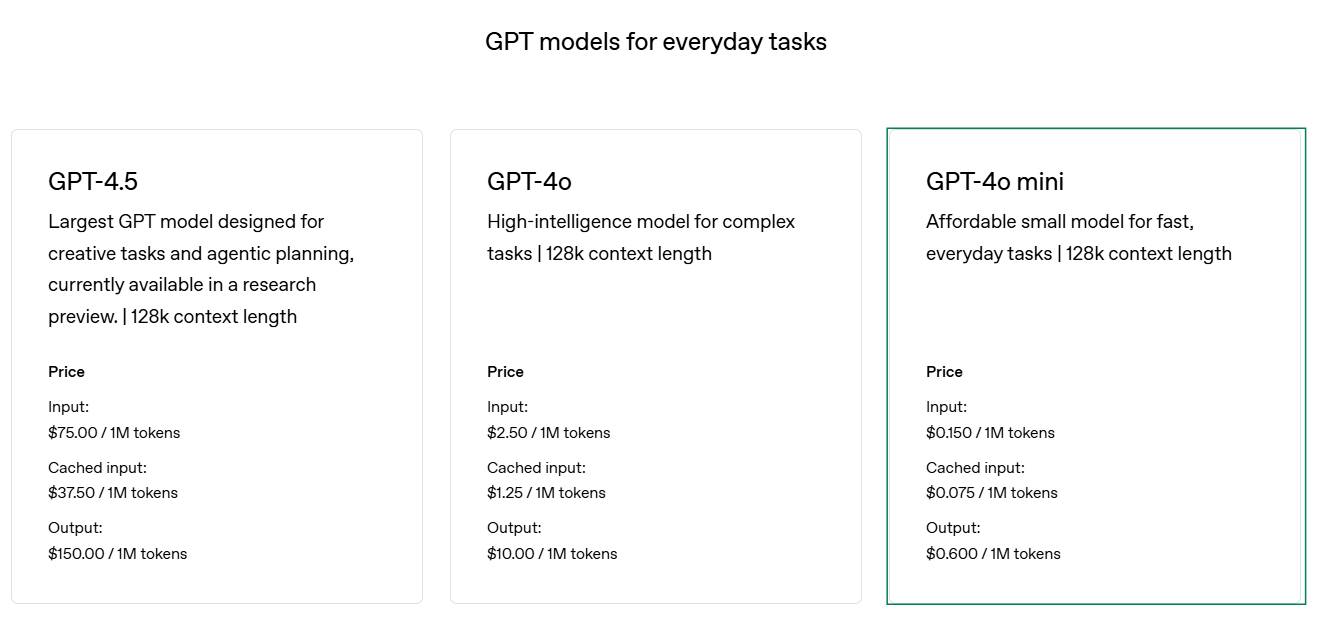

- The ChatGPT API is NOT free—each request has a cost based on:

🔹 The model you choose.

🔹 The number of input and output tokens used.

- GPT-4o Mini, released by OpenAI in mid-2024, is one of its most cost-efficient models.

- It is trained on data until 2023, which is why our response included:

“As of my last update in October 2023...”

📌 Official OpenAI Pricing: OpenAI API Pricing

📌 Detailed Cost Breakdown: OpenAI Pricing Docs
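To get a feel for how token counts turn into cost, here is a small back-of-the-envelope sketch. The function name estimate_cost_usd is hypothetical, and the per-million-token prices below are made-up placeholders, not real OpenAI rates. Always plug in the numbers from the official pricing page.

```python
def estimate_cost_usd(input_tokens, output_tokens,
                      input_price_per_million, output_price_per_million):
    """Estimate the cost of one request from token counts and per-million-token prices."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Placeholder prices for illustration only (NOT real OpenAI rates).
cost = estimate_cost_usd(
    input_tokens=1_000,
    output_tokens=50,
    input_price_per_million=1.00,
    output_price_per_million=2.00,
)
print(f"Estimated cost: ${cost:.6f}")
```

Input and output tokens are priced separately here because the cost depends on both, as noted above.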

2️⃣ Other Key Parameters Used

Let’s break down the key parts of the request:

Messages - In our case, we’re sending a single message from the user, asking: "What is the most popular non-fiction book?"

messages=[{"role": "user", "content": "What is the most popular non-fiction book?"}]

This is the conversation history you send to the model. The API follows a role-based messaging format:

role: "system" → Provides instructions for the assistant (optional). For example, we can instruct GPT to act as a book reviewer.

messages=[{"role": "system", "content": "You are a popular book reviewer."},
          {"role": "user", "content": "What is the most popular non-fiction book?"}]

role: "user" → Represents what the user asks.

role: "assistant" → Holds previous responses from the AI (optional, useful for multi-turn conversations).
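To make the three roles concrete, here is a minimal sketch of how a multi-turn conversation could be assembled before sending it. The helper build_messages is hypothetical (my own name, not part of the openai package), and the assistant reply in the history is just sample text.

```python
def build_messages(user_input, system_prompt=None, history=None):
    """Assemble a role-based message list: optional system prompt, past turns, new question.

    `history` is a list of (user_text, assistant_text) pairs from earlier turns.
    """
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for user_text, assistant_text in (history or []):
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_input})
    return messages


messages = build_messages(
    "Can you suggest a similar book?",
    system_prompt="You are a popular book reviewer.",
    history=[(
        "What is the most popular non-fiction book?",
        "One popular choice is 'Educated' by Tara Westover.",
    )],
)
for m in messages:
    print(m["role"], "->", m["content"])
```

The resulting list can be passed as the messages argument of a request, so the model sees the earlier turns along with the new question.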

Max Tokens - Tokens are chunks of words or characters (e.g., a short common word like “Hello” is usually 1 token, and “Hello, how are you?” ≈ 6 tokens).

max_tokens=50 # Limit the response length

We told the model to return at most 50 tokens. Without this limit, the model could have generated a much longer response, consuming more tokens and incurring higher costs. So it's good practice to set this parameter when making a request.
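If you just want a quick feel for how many tokens a piece of text might use, a rough rule of thumb for English is about 4 characters per token. The sketch below uses that heuristic. The helper rough_token_estimate is hypothetical, and the result is only an approximation. For exact counts you would need a real tokenizer library such as tiktoken.

```python
import math


def rough_token_estimate(text, chars_per_token=4):
    """Very rough token estimate for English text (assumes ~4 characters per token)."""
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


prompt = "What is the most popular non-fiction book?"
print("Approximate prompt tokens:", rough_token_estimate(prompt))
```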

Printing response - When we make a request to the ChatGPT API, the response comes in a structured JSON format. The completion.choices list contains one or more responses generated by the model.

{
  "choices": [
    {
      "finish_reason": "length",
      "index": 0,
      "logprobs": null,
      "message": {
        "role": "assistant",
        "content": "As of my last update in October 2023, one of the most popular non-fiction books in recent years is 'Educated' by Tara Westover. This memoir chronicles the author's quest for knowledge and self-discovery, detailing her upbringing in a...",
        "refusal": null,
        "audio": null,
        "function_call": null,
        "tool_calls": null
      }
    }
  ]
}

Each choice has a message object, which includes the AI’s response. choices[0] selects the first response generated by the model.
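Notice the "finish_reason": "length" in the response above: it tells us the reply was cut off because it hit the max_tokens limit. Here is a small sketch that pulls out the text and checks for truncation. It works on a plain dictionary shaped like the JSON above. The helper extract_reply is hypothetical, and with the Python client you would use attribute access (completion.choices[0].message.content) instead.

```python
def extract_reply(response):
    """Return (text, truncated) from a response dict shaped like the JSON above."""
    choice = response["choices"][0]
    text = choice["message"]["content"]
    truncated = choice["finish_reason"] == "length"  # "length" means max_tokens was reached
    return text, truncated


# Sample data mirroring the structure shown above (content shortened).
sample = {
    "choices": [
        {
            "finish_reason": "length",
            "index": 0,
            "message": {"role": "assistant", "content": "As of my last update in October 2023, ..."},
        }
    ]
}

text, truncated = extract_reply(sample)
print(text)
if truncated:
    print("(The reply was cut off by max_tokens.)")
```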

More experimentation

There are other parameters we can use to shape the response, such as temperature, top_p, frequency_penalty, presence_penalty, and many more.

# Create a request to the ChatGPT API (reuses the client created in Step 3)
response = client.chat.completions.create(
    model="gpt-4",  # Specifies the language model to use (e.g., "gpt-4" or "gpt-3.5-turbo")
    messages=[
        {"role": "system", "content": "You are a popular book reviewer."},
        {"role": "user", "content": "What is the most popular non-fiction book?"}
    ],
    max_tokens=50,  # Limits the response length
    temperature=0.7,  # Controls randomness (0 = most deterministic; higher values, up to 2, = more random)
    top_p=1.0,  # Nucleus sampling for response diversity (1.0 considers all possible tokens)
    frequency_penalty=0,  # Adjusts likelihood of repetitive words
    presence_penalty=0  # Adjusts likelihood of introducing new topics
)

# Print the AI's response
print(response.choices[0].message.content)
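Each of these parameters only accepts values within a certain range. As far as I know from the API reference, temperature goes from 0 to 2, top_p from 0 to 1, and both penalties from -2 to 2. Here is a minimal sketch that checks values before you send a request. The helper check_sampling_params is hypothetical, so double-check the ranges against the current docs.

```python
# Accepted ranges as I understand them from the API reference (verify against current docs).
PARAM_RANGES = {
    "temperature": (0.0, 2.0),
    "top_p": (0.0, 1.0),
    "frequency_penalty": (-2.0, 2.0),
    "presence_penalty": (-2.0, 2.0),
}


def check_sampling_params(**params):
    """Return a list of human-readable problems; an empty list means all values are in range."""
    problems = []
    for name, value in params.items():
        if name not in PARAM_RANGES:
            continue  # ignore parameters this sketch does not know about
        low, high = PARAM_RANGES[name]
        if not low <= value <= high:
            problems.append(f"{name}={value} is outside [{low}, {high}]")
    return problems


print(check_sampling_params(temperature=0.7, top_p=1.0, frequency_penalty=0, presence_penalty=0))
print(check_sampling_params(temperature=3.0))
```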

Let’s understand these parameters with simple, real-world examples.

1. temperature

Controls randomness in responses.

🔹 Think of it like choosing a movie script style.

temperature = 0.2 → Predictable and factual (Documentary)

temperature = 0.8 → Creative and unpredictable (Fantasy movie)

📝 Example in text generation:

Prompt: "Give me a sentence about the sky."

temperature = 0.2: "The sky is blue during the day and dark at night."

temperature = 0.8: "The sky dances with golden hues as the sun sets beyond the horizon."

🔖 When to use?

- If you need precise answers (math, coding) → Lower temperature.

- If you want creative responses (storytelling, brainstorming) → Higher temperature.
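If you're curious what temperature does under the hood, the standard idea is to divide the model's raw scores (logits) by the temperature before turning them into probabilities. Below is a toy sketch of that technique with made-up scores for three candidate words. It is an illustration of temperature-scaled softmax in general, not OpenAI's actual implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after scaling them by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next words.
logits = {"blue": 2.0, "vast": 1.0, "dancing": 0.5}
for t in (0.2, 0.8):
    probs = softmax_with_temperature(list(logits.values()), t)
    print(f"temperature={t}:", {w: round(p, 3) for w, p in zip(logits, probs)})
```

At the lower temperature almost all the probability lands on the top-scoring word, while the higher temperature leaves more room for the less likely words.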

2. top_p

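Controls how much of the probability distribution the model samples from: it keeps only the most likely next words whose combined probability adds up to top_p, and picks from those.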

🔹 Think of it like choosing food at a buffet.

- If top_p = 1.0, you consider all available dishes. (More variety, but also more randomness)

- If top_p = 0.5, you only pick from the top 50% most popular dishes. (More focused choices)

📝 Example in text generation:

Prompt: Write a sentence about the ocean.

top_p = 1.0: The ocean is vast, mysterious, and home to countless species.

top_p = 0.5: The ocean is deep and full of life.

🔖 When to use? If you want more controlled and predictable responses, lower top_p. If you want creativity, keep it higher.
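Here is a toy sketch of the nucleus (top-p) idea: sort the candidate words by probability, keep adding them until their combined probability reaches top_p, and renormalize what's left. The probabilities are made up and the helper nucleus_filter is hypothetical. It illustrates the general technique, not OpenAI's internal code.

```python
def nucleus_filter(token_probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p."""
    ranked = sorted(token_probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {t: p / total for t, p in kept}  # renormalize so the kept probabilities sum to 1


# Made-up probabilities for the next word after "The ocean is ...".
probs = {"deep": 0.5, "vast": 0.25, "blue": 0.125, "mysterious": 0.125}
print("top_p=0.5:", nucleus_filter(probs, 0.5))
print("top_p=1.0:", nucleus_filter(probs, 1.0))
```

With the lower top_p only the most likely word survives, while top_p = 1.0 keeps every candidate in play.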

3. frequency_penalty

Reduces the chances of repeating words or phrases.

🔹 Think of it like a conversation with someone who keeps repeating themselves.

frequency_penalty = 0.0 → No penalty, so the model might say:

"I love coffee. Coffee is my favorite. Drinking coffee makes me happy."

frequency_penalty = 2.0 → The model avoids repetition:

"I love coffee. My favorite drink keeps me energized and happy."

🔖 When to use? If the model keeps repeating itself, increase frequency_penalty to make responses more varied.

4. presence_penalty

Encourages or discourages the introduction of new topics.

🔹 Think of it like a dinner conversation.

- If presence_penalty = 0.0, people stick to the same topic.

- If presence_penalty = 2.0, someone keeps bringing up new topics.

📝 Example in text generation:

Prompt: Tell me about the Eiffel Tower.

presence_penalty = 0.0: The Eiffel Tower is a famous landmark in Paris. It was built in 1889 and is a popular tourist attraction.

presence_penalty = 2.0: The Eiffel Tower is a famous landmark in Paris. Speaking of landmarks, have you heard about the Statue of Liberty? It was a gift from France to the U.S.

📌 When to use?

- If you want the model to stick to the topic, keep it low.

- If you want it to introduce new ideas, increase it.
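To see how the two penalties differ, here is a toy sketch based on my understanding of how OpenAI's documentation describes them. The frequency penalty is subtracted once per time a token has already appeared, while the presence penalty is subtracted once if the token has appeared at all. The scores below are made up and apply_penalties is a hypothetical helper, so treat this as an illustration rather than the real implementation.

```python
from collections import Counter


def apply_penalties(logits, generated_tokens, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the scores of tokens that already appeared in the generated text."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, logit in logits.items():
        count = counts[token]
        adjusted[token] = (
            logit
            - count * frequency_penalty                        # grows with every repetition
            - (1.0 if count > 0 else 0.0) * presence_penalty   # flat, applied once if seen
        )
    return adjusted


# Made-up scores for the next word, given what has been generated so far.
logits = {"coffee": 3.0, "tea": 2.0, "energized": 1.0}
so_far = ["coffee", "coffee", "tea"]
print("frequency_penalty=0.5:", apply_penalties(logits, so_far, frequency_penalty=0.5))
print("presence_penalty=0.5: ", apply_penalties(logits, so_far, presence_penalty=0.5))
```

The word that was used twice gets hit harder by the frequency penalty, whereas the presence penalty treats every already-used word the same.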

Final Analogy to Remember:

Imagine you're at a restaurant ordering food:

- top_p is like choosing from a limited menu vs. having all options.

- frequency_penalty is like telling the waiter not to suggest the same dish repeatedly.

- presence_penalty is like asking the waiter to suggest new dishes or stick to your usual order.

Closing:

In this blog, we learnt how to establish a basic connection to the ChatGPT API, choose the right model, and understand key parameters used in the request. I hope you enjoyed it!

In the upcoming blogs, we’ll build on this and explore how to create our own AI-powered applications using this API. Stay tuned! ✨
